
Introduction to progressive web apps

A progressive web app (PWA) is a standard introduced by Google that was eagerly approved by Microsoft and reluctantly adopted by Apple. It has gained massive popularity among global leaders in online technologies. Companies such as Pinterest, Uber, Airbnb, Spotify, Aliexpress, OLX, and Forbes, which have already field-tested the abilities of PWA technology to boost user engagement, proved that it’s the best answer to the challenges of the mobile world.

Now, we can say that this technology is here to stay. For the last seven years, PWAs have kept answering the need for a faster, leaner, and more rewarding user experience. They provide you with the flexibility to meet the rising expectations of internet users. In a fiercely competitive modern eCommerce environment, PWAs capture the interest of any customer-focused eCommerce professional.

We present to you the second edition of this PWA eBook, prepared by the digital commerce experts at Cloudflight, formerly Divante. The first edition was a comprehensive guide that served many eCommerce professionals and developers as a reference point. It’s been revised, updated, and redesigned to match the new challenges our industry is facing. Immerse yourself in the world of progressive web applications and the fast, reliable, and engaging user experience they provide.

01

What is a progressive web app?

A progressive web app is a type of application developed using web technologies such as JavaScript, CSS, and HTML. It closely resembles a regular web page in appearance and behavior. PWAs are easily discoverable in search engine page results and can be shared via links. What sets PWAs apart is their ability to provide functionalities similar to native mobile apps. They can operate offline, deliver push notifications, and leverage device hardware in a manner consistent with native apps.

The definition of a PWA

A progressive web app is a type of web application that can be used as a web page and mobile app on any given device. PWAs are created by fulfilling the majority of requirements listed in this checklist.

It doesn’t sound like a very precise definition of what a PWA is, does it? PWAs seem to be a modern fusion of the mobile and desktop worlds, but, since the term doesn't suggest any particular implementation, it may be challenging to craft a dictionary definition of progressive web apps.

According to Google, the PWA standard is an approach focused on the user experience, but the rest of the progressive web app definition intensifies confusion. When is a website fast and engaging enough to call it a PWA? Is it crucial to install it on the user's home screen? Or maybe it’s all about an immersive full-screen experience? In short, what exactly makes a web app a progressive web app?

Google, probably deliberately, doesn't clearly define a PWA. The idea of an open web seems to conflict with a strong standard defined by a central player in the market. The giant can't allow for the suspicion that PWAs, introduced by the team from Mountain View, force web owners to use Google’s own standards, technologies, or implementation methods. Pointing out a general direction or, better put, a general philosophy to building online touchpoints is a safer choice than forcing a specific method of making them.

Because of this, Google introduced the PWA standard and delivered Lighthouse. Lighthouse is an open-source tool that enables developers to audit a web app for PWA features.

Check your eCommerce site with Lighthouse

Lighthouse, an open-source automated tool for improving the quality of your progressive web apps, eliminates much of the manual testing that was previously required.

You can use Lighthouse to check your eCommerce site for PWA compliance as well as performance, accessibility, and best practices.
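As a rough illustration of working with an audit result, the sketch below summarizes the category scores from a Lighthouse JSON report. It assumes the report was exported as JSON (for example with the Lighthouse CLI's `--output json` option) and that each entry under `categories` carries an `id`, a `title`, and a `score` between 0 and 1. The function name `summarizeLighthouse`, the 0.9 threshold, and the sample data are illustrative assumptions, and the set of categories present can differ between Lighthouse versions.

```javascript
// Turn the "categories" section of a Lighthouse JSON report into a short summary.
// Each category carries a score between 0 and 1, or null when it could not be computed.
function summarizeLighthouse(report, threshold = 0.9) {
  return Object.values(report.categories).map(({ id, title, score }) => ({
    id,
    title,
    percent: score === null ? null : Math.round(score * 100),
    passes: score !== null && score >= threshold,
  }));
}

// Hypothetical, hand-written report fragment used only for illustration.
const sampleReport = {
  categories: {
    performance: { id: "performance", title: "Performance", score: 0.72 },
    accessibility: { id: "accessibility", title: "Accessibility", score: 0.95 },
  },
};

for (const row of summarizeLighthouse(sampleReport)) {
  console.log(`${row.title}: ${row.percent} (${row.passes ? "ok" : "needs work"})`);
}
```

A summary like this can be wired into a build pipeline so that a drop below the chosen threshold is noticed before a release.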

“If you’re talking to the business people, tell them about the return on investment you get from progressive web apps. If you’re talking to the marketing people, tell them about the experiential benefits of progressive web apps. But if you’re talking to developers, tell them that a progressive web app is a website served over HTTPS with a service worker and manifest file.”

Jeremy Keith, Adactio

The term “progressive web app” is more of an umbrella definition than the name of a specific technology, but despite that, it’s relatively easy to point out what makes a web app a PWA. To be considered a PWA, websites must meet at least 75% of the requirements from the checklist mentioned before. However, three of them, according to Jeremy Keith's technical definition, are crucial.

HTTPS

The service worker, a core PWA technology that delivers offline functionality and ultra-fast performance, requires a secure context to run, so enabling HTTPS on the server is a must when creating a PWA. That shouldn't be a problem since, for some time now, the Chrome browser has marked all websites not using that protocol as insecure. Serving a site over HTTPS has become commonplace. Most hosting providers offer easy-to-use in-house tools to switch over to HTTPS. In other cases, a free certificate from the Let's Encrypt certificate authority may be helpful.
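The HTTPS requirement can be sketched in code. The example below is a simplified approximation, not the browser's own algorithm: it treats `https:` pages and plain-HTTP pages on `localhost` or `127.0.0.1` as eligible, which mirrors the common development exception. The helper name `isSecureOrigin` and the `/sw.js` path are hypothetical. In a real page, the built-in `window.isSecureContext` flag is the authoritative check.

```javascript
// Simplified check: service workers need HTTPS, with an exception for local development.
function isSecureOrigin(pageUrl) {
  const { protocol, hostname } = new URL(pageUrl);
  const isLocal = hostname === "localhost" || hostname === "127.0.0.1";
  return protocol === "https:" || (protocol === "http:" && isLocal);
}

// Browser-only part: register the worker when the page qualifies.
// "/sw.js" is an assumed location for the service worker file.
if (typeof window !== "undefined" && "serviceWorker" in navigator) {
  if (isSecureOrigin(window.location.href)) {
    navigator.serviceWorker
      .register("/sw.js")
      .then((registration) => console.log("Service worker scope:", registration.scope))
      .catch((error) => console.error("Service worker registration failed:", error));
  }
}

console.log(isSecureOrigin("https://shop.example/"));  // true
console.log(isSecureOrigin("http://shop.example/"));   // false
console.log(isSecureOrigin("http://localhost:8080/")); // true
```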

A web app manifest

A web app manifest is a simple JSON file filled with all the necessary PWA metadata, like the app name, language, icons, start URL, orientation, scope, theme color, etc. It tells a browser how to display the app.

PRO TIP: It’s worth verifying whether or not the web app manifest is set up correctly with Lighthouse, which is available in the Chrome DevTools.

An exemplary manifest.json file for a progressive web app:

    {
      "short_name": "Name",
      "name": "NM",
      "icons": [
        {
          "src": "/images/icons-S.png",
          "type": "image/png",
          "sizes": "192x192"
        },
        {
          "src": "/images/icons-M.png",
          "type": "image/png",
          "sizes": "512x512"
        }
      ],
      "start_url": "/name/?source=pwa",
      "display": "standalone",
      "theme_color": "#3367D6"
    }

💡 PRO TIP: Creating a web app manifest should not be a problem since it can be generated automatically with, for example, PWABuilder or Web App Manifest Generator.
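For a quick sanity check alongside Lighthouse, a manifest can also be inspected with a few lines of code. The sketch below is loosely based on the installability criteria that Chromium-based browsers document (a name, a start URL, an app-like display mode, and 192x192 and 512x512 icons). Other browsers apply different rules, and the function name `checkManifest` is an illustrative assumption.

```javascript
// Return a list of problems found in a parsed manifest object (empty list = looks fine).
function checkManifest(manifest) {
  const problems = [];
  if (!manifest.name && !manifest.short_name) {
    problems.push("missing name or short_name");
  }
  if (!manifest.start_url) {
    problems.push("missing start_url");
  }
  const appLikeDisplays = ["standalone", "fullscreen", "minimal-ui"];
  if (!appLikeDisplays.includes(manifest.display)) {
    problems.push("display should be standalone, fullscreen, or minimal-ui");
  }
  // "sizes" may list several sizes separated by spaces, e.g. "192x192 512x512".
  const sizes = (manifest.icons || []).flatMap((icon) =>
    String(icon.sizes || "").split(/\s+/)
  );
  for (const required of ["192x192", "512x512"]) {
    if (!sizes.includes(required)) problems.push(`missing ${required} icon`);
  }
  return problems;
}

// The manifest from the example above, as a JavaScript object.
const exampleManifest = {
  short_name: "Name",
  name: "NM",
  icons: [
    { src: "/images/icons-S.png", type: "image/png", sizes: "192x192" },
    { src: "/images/icons-M.png", type: "image/png", sizes: "512x512" },
  ],
  start_url: "/name/?source=pwa",
  display: "standalone",
  theme_color: "#3367D6",
};

console.log(checkManifest(exampleManifest)); // []
```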

Service workers

This core PWA technology is a JavaScript file that runs in the background in the browser and is directly responsible for providing the core features of a PWA, such as push notifications or background synchronization. Developers can use service workers in many different ways, but, in general, service workers let developers control how the browser handles network requests and asset caching.
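To make "controlling network requests and asset caching" more concrete, here is a minimal sketch of a cache-first strategy. The strategy itself is written as a plain function that receives the cache and the fetch function as arguments so that it can be read and tried outside a browser. The cache name `pwa-demo-v1` and the function name `cacheFirst` are assumptions made for illustration, not part of any standard.

```javascript
// Cache-first: answer from the cache when possible; otherwise go to the
// network and keep a copy of successful responses for next time.
async function cacheFirst(request, cache, fetchFn) {
  const cached = await cache.match(request);
  if (cached) return cached;
  const response = await fetchFn(request);
  if (response.ok) {
    // A response body can only be read once, so a clone goes into the cache.
    await cache.put(request, response.clone());
  }
  return response;
}

// Wiring inside a service worker file. This part only runs in a real
// service worker, where ServiceWorkerGlobalScope, caches, and fetch exist.
if (typeof ServiceWorkerGlobalScope !== "undefined") {
  self.addEventListener("fetch", (event) => {
    if (event.request.method !== "GET") return; // leave non-GET requests to the network
    event.respondWith(
      caches.open("pwa-demo-v1").then((cache) => cacheFirst(event.request, cache, fetch))
    );
  });
}
```

Cache-first suits assets that rarely change, such as fonts or versioned scripts. Content that must stay fresh usually calls for a different strategy.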

A widely used toolkit for creating service workers is Google's Workbox. It is not a web standard but a set of libraries and Node modules that wraps the boilerplate code in methods developers can use to implement service workers. Workbox is a well-defined and easy-to-use tool for applying various caching techniques, such as precaching, runtime caching strategies, and offline support. By using it, it’s possible to create a well-functioning PWA with less code and a lower risk of errors.
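To show the kind of boilerplate such a library takes off a developer's hands, the sketch below hand-writes one common runtime caching strategy, stale-while-revalidate. This is not Workbox's API. It is a dependency-free approximation of the idea, and the function name and injected `cache` and `fetchFn` parameters are assumptions chosen for illustration.

```javascript
// Stale-while-revalidate: serve the cached copy immediately (even if it is
// slightly old) and refresh the cache from the network in the background.
async function staleWhileRevalidate(request, cache, fetchFn) {
  const cached = await cache.match(request);

  // Start the network request in every case so the cache gets refreshed.
  const refresh = fetchFn(request).then(async (response) => {
    if (response.ok) await cache.put(request, response.clone());
    return response;
  });

  if (cached) {
    // The user already has an answer, so a failed refresh is ignored here.
    refresh.catch(() => {});
    return cached;
  }
  // Nothing cached yet: the caller has to wait for the network.
  return refresh;
}

// In a real service worker, the background refresh should be kept alive with
// event.waitUntil(...), otherwise the browser may stop the worker early.
```

The trade-off is that a visitor may briefly see an older version of a resource in exchange for an instant response.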

03

PWA characteristics

PWAs are fast

Site speed is crucial for every online business no matter if it’s a store or a news site. Online, primarily mobile, consumers are very impatient. Half of mobile visits are abandoned if the loading of the page takes more than three seconds.

According to a study by Portent, “a site that loads in 1 second has a conversion rate 3x higher than a site that loads in 5 seconds.”

Conversion rates are directly related to page load time, which forces brands to optimize their websites for speed and efficiency. PWAs guarantee both. Once loaded, they react to users' behavior smoothly and without the need to be reloaded.

PWAs are reliable

The reliability of progressive web apps is based on their independence from an internet connection. A PWA can work offline and provide a stable experience no matter the quality of the connection. It allows users to stay engaged as long as they want. They can continue browsing a product catalog or even add items to a cart without an internet connection.
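One way to picture adding items to a cart without a connection is a small queue that holds actions until they can be sent. The sketch below is an in-memory illustration under simplifying assumptions: the class name `OfflineQueue`, the `send` callback, and the action shape are hypothetical, and a production PWA would persist the queue (for example in IndexedDB) so it survives a page reload.

```javascript
// Holds actions (such as "add to cart") while offline and replays them in order.
class OfflineQueue {
  constructor(send) {
    this.send = send;   // async function that delivers one action to the server
    this.pending = [];  // actions waiting for a connection
  }

  // Try to send right away when online; otherwise (or on failure) keep the action.
  async submit(action, isOnline) {
    if (isOnline) {
      try {
        await this.send(action);
        return "sent";
      } catch {
        // Fall through and queue the action for a later retry.
      }
    }
    this.pending.push(action);
    return "queued";
  }

  // Replay queued actions in order; stop at the first failure and keep the rest.
  async flush() {
    let sent = 0;
    while (this.pending.length > 0) {
      try {
        await this.send(this.pending[0]);
      } catch {
        break;
      }
      this.pending.shift();
      sent += 1;
    }
    return sent;
  }
}

// Browser-only wiring: retry whenever the connection comes back.
// The "/api/cart" endpoint is an assumed placeholder.
if (typeof window !== "undefined") {
  const queue = new OfflineQueue((action) =>
    fetch("/api/cart", { method: "POST", body: JSON.stringify(action) })
  );
  window.addEventListener("online", () => queue.flush());
}
```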

PWAs are engaging

A PWA has access to the device's features, which makes it able to enrich the user experience and avoid problems with re-engagement. On top of that, PWAs are conveniently accessible directly from a browser and easy to pin to the user’s home screen. Brands can send their consumers push notifications with special real-time offers, updates, and reminders of cart abandonments, which increases customers’ loyalty.
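As a sketch of how push notifications are typically set up on the client, the example below asks for permission and subscribes through the service worker registration. It assumes a service worker is already registered and that the server exposes a URL-safe base64 public key (often called a VAPID key). The function names are illustrative. The helper that converts the key into bytes is plain JavaScript and can be tried outside a browser.

```javascript
// Convert a URL-safe base64 string (as used for push server keys) into bytes.
function urlBase64ToUint8Array(base64Url) {
  const padding = "=".repeat((4 - (base64Url.length % 4)) % 4);
  const base64 = (base64Url + padding).replace(/-/g, "+").replace(/_/g, "/");
  const raw = atob(base64);
  return Uint8Array.from(raw, (ch) => ch.charCodeAt(0));
}

// Browser-only: ask for permission, then create a push subscription.
// Returns null when the user declines notifications.
async function subscribeToPush(publicKey) {
  const permission = await Notification.requestPermission();
  if (permission !== "granted") return null;
  const registration = await navigator.serviceWorker.ready;
  return registration.pushManager.subscribe({
    userVisibleOnly: true, // every push must result in a visible notification
    applicationServerKey: urlBase64ToUint8Array(publicKey),
  });
}

console.log(Array.from(urlBase64ToUint8Array("AQID"))); // [ 1, 2, 3 ]
```

The resulting subscription object is what the server needs in order to deliver offers, updates, or cart reminders to that device. Asking for permission in response to a clear user action, rather than on page load, tends to be better received.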

04

PWA pros: the key advantages of progressive web apps

Being fast, reliable, and engaging translates to multiple advantages for anyone working with or using progressive web apps. Regardless of whether we talk about benefits for users, developers, or businesses, everyone wins with PWAs:

- Mobile-first approach: PWAs are the direct answer to mobile users’ needs and allow businesses to build web solutions that first and foremost work excellently on mobile devices.

- All in one: With a PWA, it’s possible to develop a web page and app at the same time with lower costs and a shorter time to market. On the other hand, users don’t have to visit app stores to get the app because they can install it directly from the browser.

- Short loading times: PWAs load two to three times faster than responsive web pages, so users don’t get annoyed with loading bars and get instantly engaged.

- Native-like user experience (UX): Push notifications, an icon on the home screen, and offline access are some of the native app features that come with PWAs.

- Offline capacity: A lot of customers suffer from low internet connectivity. A PWA allows them to continue browsing without obstacles. A PWA’s offline readiness also supports traffic peaks on major shopping days, like Black Friday.

- SEO-friendliness: A PWA can be optimized according to Google’s guidelines and indexed by Googlebot, which makes a PWA the right choice for many.

Some business factors are, however, also worth noting. With progressive web apps, developers can focus on one project that supports all operating systems and browsers instead of building apps for individual devices. This approach is more comfortable than covering all marketing channels with separate online services, although it comes with some design and business challenges.

Jump to “Benefits of progressive web apps” to learn more about how PWAs solve the problems of users, businesses, and developers.

05

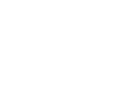

A short history of PWAs

The history of PWAs begins as early as the year 2000 with the creation of XMLHttpRequest. This technology gave us the chance to retrieve data from a URL without having to do a full page refresh. Five years later, we got AJAX, a technique for creating better, quicker, and more interactive web applications with the help of XML, HTML, CSS, and JavaScript. With AJAX, web applications can send and retrieve data from a server asynchronously in the background without interfering with the display and behavior of the existing page.

The era of native apps

Google did not come up with the idea behind PWAs from scratch. It was Apple's Steve Jobs who first presented the concept to the world during the introduction of the iPhone in 2007, when he wanted developers to build apps using standard web technologies to increase the popularity of the iPhone.

The idea of "universal apps" was frozen for almost a decade, and that time belonged to native apps that completely redesigned the way we use the internet. Native apps dominated the mobile network and helped build Google and Apple’s power on mobile. Meanwhile, web owners who wanted to make their data more accessible began to switch to responsive web design (RWD). With this approach, web pages display correctly on a variety of devices and screen sizes by using proportion-based grids and media queries in CSS. The PWA-specific approach had to wait for its moment.

PWAs strike back

In 2015, Frances Berriman and Alex Russell observed a new class of websites that were providing a better user experience than traditional web applications. All of these applications were characterized by one common feature: they gave up browser tabs to live on their own while still maintaining their ubiquity and linkability.

They called this new class of apps “progressive web apps.” A year later, during the Google I/O conference, Eric Bidelman, Senior Staff Developer Programs Engineer, introduced progressive web apps as a new standard in web development.

After their official debut, PWAs were perceived as “The Next Big Thing” in the tech world. However, just like it was with the "Year of Mobile," nothing changed overnight, even after Google and Microsoft forged an alliance to accelerate PWA adoption.

Google and Microsoft have been rivals in the technological arms race for decades, but when it came to the PWA standard, they decided to work side by side. Microsoft's decision seems especially worth noting. The giant from Redmond was able to take a step back and sacrifice its idea of a Universal Windows Platform to make room for PWAs, a concept introduced by Google.

In 2018, Apple announced its support for PWAs, and that opened the way for their mass adoption.

06

Progressive web apps vs. native apps vs. hybrid apps

PWAs and native apps aren’t the only way to create a smooth cross-platform user experience. With tools like React Native, NativeScript, Flutter, or Ionic, there’s a third way: hybrid apps, also called cross-platform apps.

Native apps vs. hybrid apps

Modern frameworks allow for building apps that provide almost-native performance but without the necessity of writing three different code bases. This is what we call a hybrid app. Developers write the application once, and it’s available on three operating systems. For native apps, there is a need to use Objective-C (iOS), Java (Android), and, in the past, C# (Windows Phone). The cross-platform approach is less time-consuming, and the costs of writing a hybrid are incomparably lower.

A well-written and optimized cross-platform app should not differ from a native application, but in more complex projects, like games or expanded programs, it may be visibly slower or even suffer from serious lag. It’s also difficult to support Android-specific or iPhone-specific functionalities with hybrids.

Hybrid apps can be a reasonable choice, especially when the project is relatively modest and the budget limited. Otherwise, a native app will be a better option. They’re written in languages specific to Android and iOS, which guarantee the best possible performance and compatibility with a smartphone's functionalities. Unfortunately, it also means that development takes more time and money and that the app is a lot harder to distribute and update.

Progressive web apps vs. native and hybrid apps

PWAs are still trending in the IT world, and there’s a good chance that they will soon replace native and hybrid apps. They are incredibly fast, in contrast to hybrid apps, and they use a single code base between platforms, unlike native apps.

Another notable advantage of progressive web apps is the significantly lower cost of development and the shorter time to market. But the list of PWA benefits doesn't end there. If you want to learn more, jump to the “Benefits of progressive web apps” chapter of this eBook.

07

PWA statistics and implementation results

PWA eCommerce is ranked 21st in the eCommerce Trend Radar list of trends. In over seven years on the market, progressive web apps have repeatedly proven that they deliver a tangible efficiency boost and better business results. Here are a couple of case studies that track PWA statistics and monitor site performance after implementing PWA technology.

- Kubota launched their eCommerce PWA, which resulted in a 192% growth in daily visitors and 26% growth in average monthly visits.

- Implementing a PWA at Yope increased the conversion rate by 70% and made the time to first byte a reported 1100% faster.

- The Starbucks PWA has doubled the number of their daily active users.

- Deploying PWA at Lancôme made their website 50% faster than the previous one, and Lancôme's mobile sales have increased by 16% year over year.

- With a PWA, the page-load performance of the Housing.com site increased by over 30%, and the average time-per-session increased by 10% across all browsers.

- Time spent on the Pinterest PWA site is up by 40% compared to the old mobile web experience, user-generated ad revenue is up 44%, and core engagements are up 60%.

- In a short time, the DW Shop achieved 75% of its traffic from Instagram with the use of PWA technology.

08

PWA examples

Progressive web apps were implemented by companies like Pinterest, Uber, Airbnb, Spotify, and Aliexpress, but here we’ll share with you some more relatable PWA examples. These are the progressive web app examples we had hands-on experience with, and we can vouch for the numbers mentioned in the case studies.

Staples

Staples is the second largest eCommerce retailer in the world, right behind Amazon.com. Over the years, the company grew both organically and through the acquisition of other eCommerce entities while continuing to provide online services. At some point, Staples had to unify the user experience across all touchpoints to provide a consistent brand identity.

It wasn't an easy task since Staples' online services were based on multiple, highly customized systems written in .NET and Java. Changing all of them would be time-consuming and demanding, so the eCommerce team decided to build a unified user interface (UI) for two back ends available in the Staples technology stack.

The company considered the development of a native mobile app, a new front end, and various headless solutions. However, it eventually became clear that a progressive web app was the best option.

The idea was to implement the new PWA front end and integrate it via API with Genesis and Netshope-based back ends. The final product delivered features like barcode scanning and offline ordering, which enabled Staples to improve the overall user experience of mobile users and, at the same time, make it more consistent.

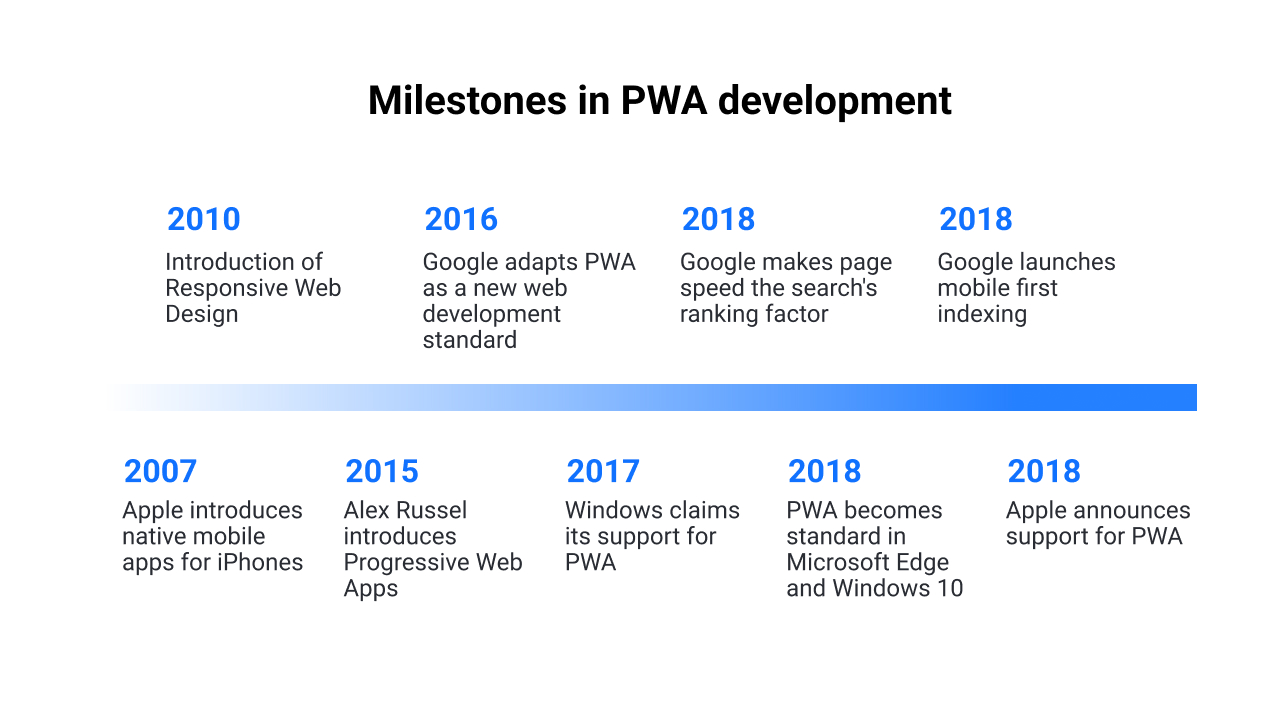

Yope

Yope is a well-known Polish brand that specializes in producing beauty and cleaning products that are natural, eco-friendly, and vegan. They decided to embrace PWA technology to enhance their online presence and provide an exceptional shopping experience. The goal was to develop a new version of their eCommerce platform that would incorporate cutting-edge features and captivate their customers with a visually appealing design.

Yope now boasts a user-friendly PWA online store that promotes the digitalization of their business, ensures the satisfaction of their customers, and facilitates revenue growth. This strategic investment in their future has yielded remarkable results, with an impressive 70% increase in overall conversion rate. The desktop conversion rate soared by 83% while the mobile conversion rate experienced a substantial growth of 64%.

Mirka


Mirka is a renowned global leader in surface finishing technology that specializes in offering a wide range of sanding solutions for the surface finishing and precision industry. Mirka operates through 18 subsidiaries situated across Europe, the Middle East, North and South America, and Asia. The company's headquarters and primary production facilities are based in Finland. Mirka's commitment to international markets is evident because more than 97% of their products are exported and sold in over 100 countries worldwide.

To effectively manage its extensive network of partners, Mirka recognized the need for a robust partner portal that would ensure brand consistency and facilitate seamless communication with its partners. In November 2020, Mirka successfully launched its Partner Portal specifically tailored for the UK and German markets.

Bottom line: PWA technology is mature

In eCommerce, where the user experience is essential, there are very few things more important than providing your users with a quick, reliable, and engaging app to finalize their purchase. That’s why, in the modern world, we’re surrounded by PWAs. Progressive web apps have caught on, and they deliver tangible results.

Now, let’s take a closer look at the role of progressive web apps in a mobile-first world.


Divante 2024
